11. The Residency postgraduate training system was introduced in 2010. Implementation was carried out in phases, with an incremental increase in the number of specialties brought into the system. The first phase of residency was rolled out in July 2010 with eight programmes offered, including a Transitional Year residency programme, which gave residents one year of exposure to various clinical disciplines to help them choose and prepare for a specialty.

12. In July 2011, the second phase was rolled out, comprising 12 programmes. These included anaesthesia, ophthalmology, ENT, orthopaedics, radiology, O&G, and a common-trunk Surgery-in-General leading to training in five surgical sub-specialties. Various internal medicine specialties were included in the third phase in July 2013.

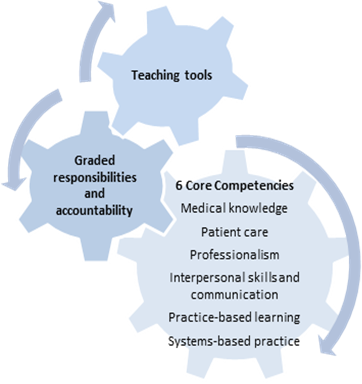

13. Four key components of graduate medical education were restructured: curriculum, assessments, people and systems. A structured curriculum was established to achieve a defined set of objectives and core competencies, and residents were given graded responsibilities. Training was provided by designated faculty, and residents had to undergo regular formative assessments. These four components created a comprehensive matrix to ensure the quality of teaching and learning, and therefore, the quality of specialists.

Curriculum

14. The curriculum was contextualised to suit local needs (Figure 2). It detailed how conventional competencies of medical knowledge and patient care should be taught progressively, and visibly demonstrated and practised, so that residents could increase their responsibilities in a stepwise manner. Besides specialty-specific competencies, emphasis was also placed on general competencies, which were becoming increasingly important.

15. Newer competencies such as professionalism, communication, practice-based learning, scholarly activities and system-based practice were also included in the curriculum.

Figure 2: The Curriculum

Assessment

16. In our prevailing British system, the emphasis was on intermediate and exit summative examinations, which, because of their punitive nature, drove learning. There was insufficient rigour in the training prior to taking the examinations, and training was not monitored or assessed on a regular basis. Formative assessment processes and tools such as mini clinical examinations and in-training examinations were not given specific attention or importance. Hence, there was a disconnect between training and examinations.

17. In addition, the UK membership examinations were usually moderated according to the overall performance of the examination candidates. In contrast, the US Specialty Board examinations were competency based, and not moderated to achieve a pass rate.

18. Under the Residency system, regular formative assessments and examinations were introduced. Trainees were assessed at regular intervals and provided with qualitative feedback. Many of these assessment tools were psychometrically validated, enabling international benchmarking. The assessments were also contextualised for local use, and all questions were scrutinised by the local test design workgroup.

People

19. Under the apprenticeship system, teaching was considered an honourable or respectable thing to do, and it was assumed that this would be done with dedication, despite the faculty not being allocated protected time for it. However, due to the increasing workload and the fact that doctors’ incomes were closely tied to service, few senior doctors found the time to devote themselves to teaching or following up on the development of their trainees.

20. Unlike the prior specialist training system, the development of residency programmes involved the separation of the roles of training providers and regulators. Thus, the individual Sponsoring Institutions (SIs) were responsible for the selection of residents and administration of residency programmes. Designated faculty were identified and given protected time to provide oversight of specialty training for the residents.

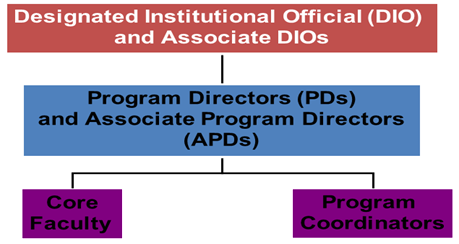

21. Every SI had to ensure that the Designated Institutional Official (DIO), Programme Directors (PDs), Core Faculty and Programme Coordinators (Figure 3) it appointed had sufficient financial support and protected time to carry out their educational and administrative responsibilities effectively. This included the resources (e.g. time, space, technology and other infrastructure) to allow for effective administration of the Graduate Medical Education Office. Attention was also given to the training and development of the faculty. In addition, teaching activities were supported by administrative staff, such as the Programme Coordinators. The funding principles for the residency programme are outlined in Annex B.

Figure 3: Key People in Sponsoring Institutions

22. The roles of the DIO were to:

a. Establish and implement policies and procedures (decisions of GMEC) regarding the quality of education and the work environment for the residents in all programmes;

b. Ensure quality of all training programmes;

c. Implement institutional requirements;

d. Oversee institutional agreements;

e. Ensure consistent resident contracts;

f. Develop, implement, and oversee an internal review process;

g. Establish and implement procedures to ensure that he/she, or a designee in his/her absence, reviews and co-signs all programme information forms and any documents or correspondence submitted to ACGME and MOH by PDs; and

h. Present an annual report (includes GMEC’s activities during the past year with attention to, at a minimum, resident supervision, resident responsibilities, resident evaluation, compliance with duty-hour standards, and resident participation in patient safety and quality of care education) to the governing body(s) of the SI and major participating sites.

23. The roles of the PD were to:

a. Be responsible for all aspects of the residency training programme;

b. Recruit residents & faculty;

c. Develop curriculum with faculty assistance;

d. Assess residents’ progress through programme;

e. Certify graduates’ competence to practise independently;

f. Ensure residents are provided with a written agreement of appointment/contract outlining the terms and conditions of their appointment to a programme;

g. Ensure residents are informed of and adhere to established educational and clinical practices, policies, and procedures in all sites to which residents are assigned; and

h. Administer and maintain an educational environment conducive to educating residents in each ACGME competency area.

24. The roles of the Core Faculty were to:

a. Devote sufficient time to an educational programme to fulfil their supervisory and teaching responsibilities and to demonstrate a strong interest in the education of residents;

b. Administer and maintain an educational environment conducive to educating residents in each of the competency areas; and

c. Establish and maintain an environment of inquiry and scholarship with an active research component.

Systems

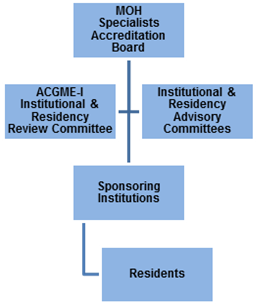

25. A system of supporting organisational structures was put in place to provide the necessary checks and balances to ensure that residents were having the desired learning experience, and that teaching was conducted regularly and in accordance with plans (Figure 4).

Figure 4: Organisational Structure

26. Both internal and external reviews were carried out on a regular basis so that improvements in the system could be made. Internally, each SI was required to form the Graduate Medical Education Committee (GMEC), which was responsible for establishing and implementing policies and procedures regarding the quality of education and the work environment for the residents in all programmes.

27. Residency Advisory Committees (RACs) were appointed by the SAB to oversee the respective specialties and Transitional Year (TY) training programmes. The roles of the various RACs were:

a. To develop the various specialties taking into consideration the healthcare needs of the nation;

b. To organise the In-Training Exams (ITEs) annually for the specialties and review the results of ITEs to improve the specialty training programme;

c. To work with the SAB, Joint Committee on Specialist Training (JCST) and MOH in matters pertaining to the specialty training and to assist in the selection and interview of residents/trainees;

d. To advise MOH and ACGME-I, where necessary or requested, on the standards, programme structure and curriculum for the specialties in Singapore;

e. To conduct accreditation site visits when necessary;

f. To work with the institutions, DIOs and PDs to ensure that the programmes comply with the requirements and standards set by ACGME-I and the local RAC, in consultation with MOH;

g. To advise, together with the specialty’s panel of subject matter experts for examinations, on the necessary improvements for assessments of residents; and

h. To conduct / oversee examinations or evaluations (American Board of Medical Specialties International (ABMS-I) or equivalent) for residents, based on the format approved by SAB/JCST.

28. The composition of the GMEC included the following:

- All PDs

- Associate DIOs

- Representative Associate PD from major participating sites

- Peer-selected resident representatives

- Institutional Coordinator

- Representative from Human Resource

- Representative from the Undergraduate Medical School

29. In addition, the GMEC consisted of the following Sub-Committees:

- Resident Welfare Sub-Committee

- Curriculum Sub-Committee

- Evaluation Sub-Committee

- Internal Review Sub-Committee

- Faculty Development Sub-Committee

- Research Sub-Committee

- Work Improvement Sub-Committee

30. The GMEC was also required to develop, implement, and oversee an internal review process by forming an internal review committee for each programme.

31. In addition to the institutional education committees, external reviews were conducted by the ACGME’s residency review committee. As the accrediting body, the review committee conducted regular audits on the integrity of the institutions’ systems and the volume and quality of the teaching processes.


IMPLEMENTATION